by Robin Rowe

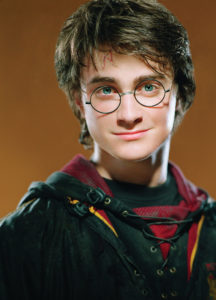

Harry Potter and the Goblet of Fire, the fourth movie in the Warner Bros. series based on the popular books by J.K. Rowling, features Harry and his Hogwarts friends taking part in a competition with two rival schools of magic. As part of the Triwizard Tournament, Harry must grapple with a giant, fire-breathing Hungarian Horntail dragon and battle underwater demons in the Black Lake. For the underwater sequence, the Britain-based production constructed the deepest underwater filming tank in Europe and the actors learned to scuba dive.

Post-production, which took place across the globe but mainly in London’s Soho Square near De Lane Lea Studios, where the final mix was done, was no less daunting. “It’s Friday night in London and it’s quite a good feeling; we’re getting near the end of this film!” said editor Mick Audsley, who was in the midst of his final pass edit on Goblet of Fire when he spoke with CineMontage. “We had a very long shoot: 52 weeks. That’s a 70-week schedule for me.” The scale of the whole production was enormous; 1.9 million feet of film was shot. Audsley says they had so many Avids he was tripping over them (the film used five Avid systems connected with shared storage).

Audsley, the editor of such movies as Proof, Twelve Monkeys and Dangerous Liaisons, had previously worked with Goblet of Fire director Mike Newell on several other films, including Mona Lisa Smile, Dance with a Stranger and Soursweet.

“Because I’m an old-fashioned editor from a Moviola background, I still mark up my own script and make copious notes as material comes in,” confesses Audsley. “I keep a diary of daily material as it arrives. The script supervisor’s notes help a bit, but I do them over myself to learn the film. Nobody understands the movie like the editor—if he’s done his job properly. I need to have encyclopedic knowledge of what’s possible with the movie.”

Largely visual effects films like the Harry Potter series are a team effort, according to Audsley, who says that scenes must be committed early so the visual effects houses can get to work. “You have got to get the edit right early on,” he stresses. “We’re making decisions on a scene when we don’t have the scene before it yet. It’s a month later when I start to see dailies, and it may be another nine months until I can see the scene in its entirety!”

Every element was filmed separately in the effects shots. “An underwater scene may have two actors in the tank; that and a shot with a ghost absent may be filmed the same day,” Audsley explains. “Later, the ghost will be shot against green screen. For eye line, there’s a diver off screen for the actor to react to. Or, if it’s a close-up, there may be a green stick that gets painted out later. The human performance is of prime importance at the first stage. The way those performances build and work is the most important thing in the cut.”

Audsley did not cut scanned storyboards together into previsualized story reels, but edited in a more traditional process by building the movie sequence by sequence, reel by reel, as footage came in. A sequence (or scene) is two to three minutes long. A reel is 20 minutes in length. Goblet of Fire is eight and a half reels.
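As a rough back-of-the-envelope check on those figures, the sketch below works out the longest running time that eight and a half 20-minute reels could hold and compares it with the 1.9 million feet of film mentioned earlier. The footage conversion assumes 35mm 4-perf stock at 24 frames per second (16 frames per foot); the article does not state the camera format, so treat that part as an assumption, and the function names are illustrative only.

```python
# Figures taken from the article.
REEL_MINUTES = 20        # nominal length of one reel
REELS = 8.5              # reel count for Goblet of Fire
FEET_SHOT = 1_900_000    # total film exposed during the shoot

# Assumption (not stated in the article): 35mm 4-perf film at 24 fps,
# which works out to 16 frames per foot.
FRAMES_PER_FOOT = 16
FPS = 24


def max_running_minutes(reels, reel_minutes=REEL_MINUTES):
    """Upper bound on running time if every reel were completely full."""
    return reels * reel_minutes


def footage_minutes(feet, frames_per_foot=FRAMES_PER_FOOT, fps=FPS):
    """Screen time represented by a given length of film."""
    return feet * frames_per_foot / fps / 60


if __name__ == "__main__":
    cut = max_running_minutes(REELS)
    shot = footage_minutes(FEET_SHOT)
    print(f"finished cut: at most {cut:.0f} minutes")
    print(f"footage shot: about {shot / 60:.0f} hours")
    print(f"shooting ratio: roughly {shot / cut:.0f}:1")
```

Because the last reel is only half full and reels are rarely loaded to the frame, the first number is a ceiling rather than the actual running time.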

Turnaround on the dailies was pretty quick. Film shot on a Monday was processed overnight at the lab. “On Tuesday, we synched sound and picture together,” says Audsley. “In the Avid, we lay audio tracks down per the physical reels. The audio is positioned to match the claps in the Avid and exported to an Akai system that lays sound on hard disk.” There was no picture in the Avid at that stage because the film hadn’t been to telecine yet. It was a mag-less sync projection system with film dailies. “Seeing dailies screened as film rushes is a luxury of doing a bigger-budget film,” adds Audsley.

On Tuesday night, it was back to the lab for telecine. The transfer arrived on Wednesday as Beta tape. Audsley’s assistant Ben Kenton catalogued it and put it in an Avid bin. “Once it’s all been logged by the cutting room, it’s presented to me for editing,” says Audsley. “Usually, I work over the edit four or five times until I’m comfortable showing it to Mike.” Director Newell might see it the following Monday.

Courtesy of Warner Bros.

So Audsley could focus on keeping the whole picture in his head, the sequences from the various effects houses used were channeled through visual effects supervisor Jim Mitchell, formerly of Industrial Light & Magic (ILM), where he had worked on such films as The Day After Tomorrow, Harry Potter and the Chamber of Secrets and Jurassic Park III. Mitchell supervised 1,200 shots and coordinated nine outside effects facilities around the world.

“Bringing Harry Potter effects to film was a tried-and-true process,” says Mitchell. “It all starts with the book, of course, and then the script. Theresa Corrao, the visual effects producer, and I then broke down the script and identified all possible effects shots. Then I worked with Mike and production designer Stuart Craig to conceptualize the sequences with artwork and storyboards. We then took the storyboards of certain sequences, such as the dragon and underwater, and gave them movement by pre-visualizing them.” In selecting the visual effects houses for Goblet of Fire, Mitchell said he considered many aspects: capacity, previous work and, most importantly, enthusiasm and talent.

“We did the dragon and the World Cup sequence near the beginning of the movie,” notes ILM’s visual effects supervisor Tim Alexander, whose credits include Sky Captain and the World of Tomorrow and Hidalgo. “For the previsualization of the World Cup sequence, we cut together storyboards with Final Cut Pro; basically took stills cut to the length of each shot.” Alexander chose Final Cut Pro [FCP] because it was convenient early in the process, and because it was what he had on his laptop. “Apple Motion could be cut right in the FCP timeline,” he says, explaining that Motion was used for most of the overlays because it was better for titles and burn-ins.

“We created a Maya virtual environment of the stadium,” Alexander continues. “The pre-visualized version was a much lighter version of the later Maya model. By starting in Maya we gained a lot of ground, especially camera moves.” The big crowd scene with 80,000 fans was done using motion capture and simulation. “We got a few volunteers in blue suits and captured them sitting down, standing up, cheering, doing the wave and pointing,” he explains. Using an internally developed Maya plug-in, an artist set the crowd “excitement.” The software grabbed random models and heads, and had “targets” so that the crowd followed the Quidditch ball.

ILM has its own editing tool called ffc (fast fine cuts) that enables artists to cut in a shot instantly. “Using ffc the artist can view each take,” explains Alexander. “It’s a simple tool to adjust cut frames and re-export as a single QuickTime movie file.” The overall edit was done in Avid.

For San Francisco-based effects house The Orphanage, Goblet of Fire was a return to a much more traditional film process after such high-definition productions as Sin City and Sharkboy and Lavagirl, according to the company’s head of editorial, Carl Walters.

“Final Cut Pro is our NLE [nonlinear editing] tool of choice at this facility,” he says. “It’s what we use to pump out video resolution QuickTime files as dailies.” Final Cut Pro isn’t attractive cost-wise. “Using FCP fits in the philosophy of The Orphanage, where the solution is more about what the operator brings,” says Walters.

“We really like After Effects as a compositing tool,” adds The Orphanage’s associate visual effects supervisor Kevin Baillie. “We made a real effort in Goblet of Fire to do everything in gamma 1.0 linear color space. We render OpenEXR out of all our applications: texturing, lighting, rendering.”

“A lot of over-bright color intensity was used in Goblet of Fire,” says Walters. The Orphanage created OpenEXR images for the film with RGBA, Z, and V channels. The Z channel is Z-depth (distance). The V channel is a velocity vector to use for motion blur in post.
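To give a feel for how a per-pixel velocity channel can drive motion blur in post, here is a minimal one-dimensional sketch: each output pixel averages a handful of samples taken along its own velocity. It is a simplified gather-style blur on a single scanline, not The Orphanage’s pipeline; the function name, the sample count and the edge clamping are all assumptions made for illustration.

```python
def motion_blur_row(row, velocity, samples=5):
    """Blur one scanline using a per-pixel velocity channel.

    row      -- list of pixel intensities (one channel, linear light)
    velocity -- list of per-pixel velocities in pixels per frame (the "V" data)
    samples  -- number of taps taken along each pixel's motion path
    """
    if samples < 2:
        raise ValueError("samples must be at least 2")
    width = len(row)
    blurred = []
    for x in range(width):
        total = 0.0
        for i in range(samples):
            # Spread the taps from -v/2 to +v/2 around the pixel.
            t = i / (samples - 1) - 0.5
            sx = round(x + t * velocity[x])
            sx = min(max(sx, 0), width - 1)  # clamp at the image edges
            total += row[sx]
        blurred.append(total / samples)
    return blurred


if __name__ == "__main__":
    row = [0.0, 0.0, 1.0, 0.0, 0.0]
    print(motion_blur_row(row, [0.0] * 5))  # static pixels stay sharp
    print(motion_blur_row(row, [4.0] * 5))  # moving pixels smear the highlight
```

Rendering velocity as its own channel means the amount of blur can be changed in the composite without going back to the 3-D render.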

The OpenEXR image file format, created by ILM and NVIDIA, is a high dynamic range (HDR) format standard in which pixel brightness can surpass 1.0. HDR is important for visual effects because, while the pixel brightness of a white sheet of paper may only reach 1.0, the sun and other rendered light sources can be much brighter. Clipping a pixel to 1.0 too early in the pipeline can result in muddy-looking colors later if image processing in the pipeline needs to reflect the over-bright color intensity.
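The effect of clipping too early can be shown with a few lines of arithmetic. In this sketch a hypothetical over-bright, warm-colored highlight is darkened by three stops, once after being clipped to 1.0 and once before. The pixel values and helper names are invented for illustration.

```python
def expose(pixel, stops):
    """Scale a linear RGB pixel by a number of photographic stops."""
    gain = 2.0 ** stops
    return tuple(channel * gain for channel in pixel)


def clip(pixel, ceiling=1.0):
    """Clamp each channel to the ceiling, as a non-HDR format would."""
    return tuple(min(channel, ceiling) for channel in pixel)


# Hypothetical over-bright highlight: far above 1.0, with a warm tint.
highlight = (8.0, 6.0, 2.0)

# Clip first, then darken: every channel was flattened to 1.0,
# so the tint is gone and the result is a neutral grey.
clipped_early = expose(clip(highlight), -3)

# Darken first, then clip: the ratios between channels survive.
clipped_late = clip(expose(highlight, -3))

if __name__ == "__main__":
    print("clipped early:", clipped_early)
    print("clipped late: ", clipped_late)
```

The early-clipped pixel comes out as an even grey, while the late-clipped pixel keeps its warm color, which is the “muddy” versus correct result described above.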

The Orphanage also implemented eLin, a clever software plug-in that extends After Effects to work in HDR using a 16-bit integer format with a range of 0 to 13.0. Using eLin enables After Effects to emulate real-world lighting, motion blurring and perception in two- and three-dimensional composites. After a conventional 1.0-based pixel is converted into 13.0-based eLin, it should appear quite dark, but the eLin software compensates by using After Effects 6.5 guide layers to apply a LUT (lookup table) so the preview looks right. After Effects works unaware that it’s in HDR space.
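The idea of squeezing a 0-to-13.0 range into a 16-bit integer can be sketched as follows. This is not eLin’s actual encoding, which the article does not describe; it assumes a plain linear mapping onto the full unsigned 16-bit range, and the preview function is a hypothetical stand-in for the viewing LUT.

```python
MAX_LINEAR = 13.0   # top of the range, per the article
MAX_CODE = 65535    # assumption: the full unsigned 16-bit range is used


def encode(value):
    """Map a linear-light value in [0, 13.0] to a 16-bit integer code."""
    clamped = min(max(value, 0.0), MAX_LINEAR)
    return round(clamped / MAX_LINEAR * MAX_CODE)


def decode(code):
    """Recover the linear-light value from a 16-bit integer code."""
    return code / MAX_CODE * MAX_LINEAR


def preview(code):
    """Hypothetical viewing LUT: show linear 1.0 as display white."""
    return min(decode(code), 1.0)


if __name__ == "__main__":
    paper_white = encode(1.0)
    print("code for 1.0:", paper_white)
    print("fraction of the integer range:", paper_white / MAX_CODE)
    print("after the preview LUT:", preview(paper_white))
```

Under these assumptions, ordinary white lands at about one-thirteenth of the integer range, which is why the raw image looks dark until a preview LUT scales it back up for display.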

“At Rising Sun Pictures, the bulk of our work revolved around the film’s Goblet of Fire and the age line surrounding it,” says Rising Sun Pictures visual effects supervisor Tony Clark, who also worked on Sky Captain and the World of Tomorrow. Rising Sun Pictures is based in Adelaide, South Australia. The applications used on Goblet of Fire included Maya, Houdini and Shake, with rendering in 3Delight—all on Linux.

“Executing this kind of work out here would have been unthinkable only six years ago,” explains Clark. “People might think that working with a company halfway around the world is a problem, but it can be an advantage. We turn most things around for next-day reviews because we take notes at the end of the production’s day and implement them during our work day to return to production next morning [London time]. Everyone is seeing the same movie clips or images at the same time in cineSync [a QuickTime dailies system developed at Rising Sun], and can point, draw, etc., on the image.”

The titular Goblet of Fire had a range of specific actions that it needed to perform, but it also had to spend a lot of time sitting there and burning like normal fire. Its behavior precluded using a real flame composited into the Goblet. “The flame ended up being created completely in 3-D as 2K and 4K elements using fluid dynamics techniques,” reveals Clark.

“Our most intense individual shot was the flaming bird that confronts Dumbledore,” he says. “This came into play quite late in production and was a result of some brainstorming of ideas between Mitchell, Corrao and our team for what might happen at that moment of the film. The plates were already shot for this, so whatever we did had to fit in with the existing actions.”

Ultimately, there’s a paradox for editors with big visual effects movies. The fact that the movie takes longer forces the editor to work faster. Because of the visual effects lead time, Audsley had to commit his edit decisions sooner and hope they would work. But it isn’t until the effects shots come back months later that he can feel comfortable that he made the right choices. “There isn’t time for big changes later,” explains Audsley in conclusion. “It’s like a bungee jump—once you jump, you have to go with it until it bounces you back.”