By Mel Lambert

Today’s cinema and home-theater audiences have come to expect heightened realism, which means some form of immersive soundtrack using height channels to create a 3D experience. Dolby Atmos is the most prevalent format for creating that “being-there” feeling; the format can handle up to 128 discrete objects for routing to horizontal and height playback, and is available theatrically or via streaming and Blu-ray media.

But additional tools are needed to create realistic ambiences during both editorial and re-recording. Here, we consider products from three of the leading developers of processors and plug-ins: Sound Particles, iZotope/Exponential Audio and Liquid Sonics.

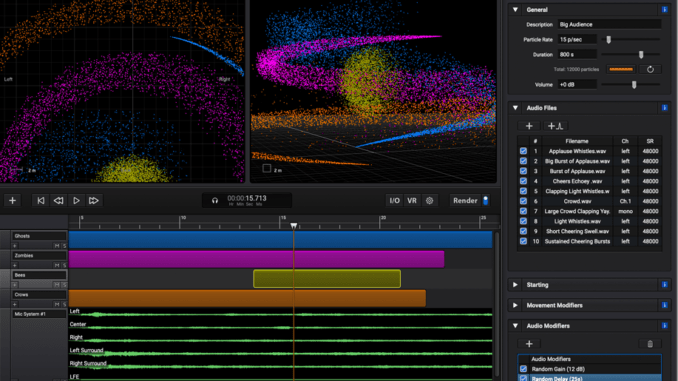

Sound Particles, based in Portugal, offers a stand-alone ambience processor and several DAW plug-ins. In essence, the Sound Particles (SP) processing engine runs a complex algorithm that generates several thousand sound particles or objects. These represent the audio signals that would be produced within a complex three-dimensional environment and captured by a virtual microphone, taking into account propagation effects such as Doppler shift and distance attenuation. Complex scenes may not render in real time, often requiring a couple of minutes, although users can audition the audio results while they are being rendered.
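For readers who want a feel for the two propagation effects mentioned above, here is a minimal Python sketch of how a single particle's level and pitch might be adjusted for a fixed virtual microphone. It is an illustration built on textbook physics (the inverse-distance law and the standard Doppler formula for a moving source), not Sound Particles' actual algorithm; the function names, the 343 m/s speed of sound and the 1 m reference distance are all assumptions made for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 degrees C


def distance_gain(distance_m, reference_m=1.0):
    """Inverse-distance law: amplitude halves for each doubling of distance.

    Distances closer than the (assumed) reference are clamped to unity gain.
    """
    return reference_m / max(distance_m, reference_m)


def doppler_ratio(source_pos, source_vel, mic_pos):
    """Ratio of heard to emitted frequency for a moving source, fixed microphone."""
    offset = [m - s for s, m in zip(source_pos, mic_pos)]
    dist = math.sqrt(sum(c * c for c in offset))
    if dist == 0.0:
        return 1.0
    # Component of the source velocity pointing toward the microphone.
    v_toward = sum(v * c for v, c in zip(source_vel, offset)) / dist
    return SPEED_OF_SOUND / (SPEED_OF_SOUND - v_toward)


# Hypothetical particle: 10 m from the microphone, approaching at 20 m/s.
gain = distance_gain(10.0)
pitch = doppler_ratio((0.0, 0.0, 0.0), (20.0, 0.0, 0.0), (10.0, 0.0, 0.0))
print(f"gain {gain:.3f}, pitch ratio {pitch:.3f}")
```

Repeating this calculation for thousands of particles, each with its own position and motion, and summing the results at the microphone gives a rough sense of why dense scenes can take minutes rather than running in real time.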

Unsurprisingly, one TV show whose sound editors have been using Sound Particles is “Stranger Things,” the sci-fi horror series now in its fourth season, which is currently streaming on Netflix with a Dolby Atmos 7.1.4-channel soundtrack and is available on Blu-ray Disc.

According to dialogue supervisor Ryan Cole, MPSE, “There is a scene in [Season 4] Episode 3 where the main villain, Vecna, is searching the minds of the Hawkins townspeople. We had our loop-group team give us dozens of ‘thoughts,’ which they said out loud. We then edited these into individual files and fed them into Sound Particles. We tweaked certain settings in the FIRE preset: width, speed, pitch and number of particles. From there we output a couple of 7.1.2 beds – one for adults, one for teens – for our mix session, and continued to edit it to our liking. We provided the tracks as a layer in the edit session for Mark Paterson, our dialogue and music re-recording mixer, who would really make the mix sound immersive.”

Lead FX editor Angelo Palazzo, MPSE, revealed that he used the process “early on, when we got a first look at the DemoBat Swarms. I first recorded a series of wing flaps using leather jackets and anything ‘fleshy’ sounding, like heavy cloth and canvas bags. After loading the best parts into SP, I started with presets like ‘Flying Out of the Cave’ and ‘Flying By,’ and tweaked them until I got the right vibe and texture. Those ended up being the Swarm layer that we used any time the bats would attack and surge, and when Eddie gets engulfed by them [in S4E9].”

But the editor leaves all his units in either mono or stereo, “so we do not tie up our mixers’ hands; we like to leave them as much flexibility as possible to move sounds around,” Palazzo said. “With the bats, I’ll make some new sounds and pan them just to get things in the ballpark; Craig or Will can undo anything if they need to.”

Reportedly, Sound Particles processing was used on HBO’s “Game of Thrones,” as well as the soundtracks for “Frozen 2,” “Aquaman” and “Ready Player One.” SP-based workstation plug-ins include Space Controller, which allows a smartphone to deliver panning information to an Atmos immersive mix; Energy Panner, which is controlled by the intensity of a sound; and Brightness Panner, which uses frequency instead of sound levels.

During sound design for director Brett Morgen’s “Moonage Daydream,” an upcoming theatrical documentary about the singer David Bowie, dialogue/crowd editor Jens Petersen, MPSE, used Sound Particles as an SFX editor to enhance the live audiences. “Because of Covid restrictions,” Petersen explained, “we had to use individual microphones for each of the 10 crowd artists singing along with the 15 original Bowie songs. To create different layers, we made up to 12 recordings of each song, using multiple artists, and input these into the Sound Particles processor [with 3D models of Bowie’s concert stadiums]. We had maybe 120 individual tracks per song plus stereo tracks of medium and far perspective recordings, plus up-high ambience.” The processed 7.0-channel results with up to 5,000 virtual audience members were turned over to supervising sound editors John Warhurst and Nina Hartstone.

“We’ve been developing methods to create this type of singing-crowd recording since ‘Les Miserables,’” John Warhurst recalled, “including the much talked-about ‘Bohemian Rhapsody.’ Now, Sound Particles lets us create a huge crowd by multiplying recordings; the end result sounds absolutely real! It’s a huge manipulation but sounds authentic without artifacts. On ‘Moonage Daydream,’ this has raised the bar in terms of what’s possible in Atmos immersive soundtracks.”

“The results in Atmos were magical,” Petersen said, “with so much energy from the virtual audiences. But to create that enhanced realism – and put each song line in its own space – meant that we needed a lot of crowd input sources. Our ‘Secret Sauce’ was the energy we captured during those conducted audience performances.”

Re-recording mixer Tom Marks, CAS, has used the iZotope Stratus 3D by Exponential Audio plug-in and its antecedents for several years to provide realistic reverb and ambiences for immersive soundtrack projects. “For me, theatrical-release Atmos is a very revealing playback format,” he said. “With enhanced bass management and multiple full-range speakers around the audience, I need high-precision reverbs that blend naturally with the source. Stratus 3D sits across all of my surround reverb busses and delivers outstanding fidelity.”

Recent projects include director Roland Emmerich’s “Moonfall,” which features scenes set in outer space after a strange force has knocked the Moon from its orbit and sent it on a collision course with the Earth. “I needed a reverb to seamlessly extend the ambience on the music tracks, for example, using some of the halls in Stratus 3D,” the mixer said.

Marks also used its companion plug-in, Symphony 3D, on dialogue, ADR and group. “With all of the on- and off-Earth locations, Symphony 3D makes it easy for me to put the dialogue into a space that’s believable.”

For “The Watcher,” a new Netflix series that will stream later this year with an Atmos soundtrack, Marks utilized Stratus 3D. “Using various room, chamber, and hall presets, I placed the dialogue and source music throughout the environments in highly realistic ways that served the story,” he said. “The score arrived as stereo stems and the source music as stereo mixes. I panned those tracks, sweetened them with a few instances of Stratus 3D and then routed the plug-in’s 7.1.4 output to the Atmos bed and objects.”

Music scoring mixer Dennis Sands, CAS, operates his own Atmos-compatible mix stage, Sound Waves SB, in Santa Barbara, north of Los Angeles. His most recent project was the score for director Robert Zemeckis’ new live-action feature, “Pinocchio,” scheduled for streaming in early September via Disney+. “Exponential Audio was one of the early developers of useful reverb plug-ins,” he recalled. “Stratus 3D and Symphony 3D are versatile, elegant and robust, and now sonically better than earlier versions. While Stratus is an effects-type plug-in, Symphony is more of a ‘traditional,’ Big Hall-type of plug-in. For synth pads and similar elements, I use tighter reverbs to give a sense of depth; I use Symphony on orchestral blends. They both offer a large array of presets that I use as starting points.”

Sands delivers 7.1-channel stems to the dub stage. “Music re-recording mixers, I find, prefer to develop their own Atmos objects inside the Pro Tools session or, more likely, the Dolby RMU [Rendering and Mastering Unit]. Time is always at a premium and those creative Atmos decisions need to be made on the stage. But if the studio asks me to prepare a separate immersive music mix for soundtrack release – as I did on Disney’s ‘Dr. Strange’ – I’ll output an Atmos 7.1.4 mix using the Dolby toolset to elevate elements as height objects. Stratus 3D does an excellent job of creating cohesive ambiences that bring a mix to life.”

Music scoring mixer Alan Meyerson has been using Liquid Sonics Cinematic Rooms, a surround/Atmos reverb plug-in, on several recent projects, including the energetic score for director Denis Villeneuve’s film, “Dune,” by composer Hans Zimmer, working with supervising music editor Ryan Rubin. “Cinematic Rooms has become my go-to reverb for 5.1- or 7.1-channel music mixes,” he said. “It is a great-sounding algorithm with an easy-to-use interface that offers full control of the ambiences I need to locate the music mixes, with full control of room size and decay [parameters]. Although Cinematic Rooms supports up to 7.1.6 formats” – Pro Tools currently offers a maximum channel width of 7.1.2, plus three stereo auxiliary outputs provided by the plug-in – “I do not deliver Atmos objects; these will be developed on the re-recording stage.”