by Joseph Herman

Recently, Adobe unveiled important updates and enhancements to its suite of video applications in the Creative Cloud. Let’s take a look at some of the new features aimed at filmmakers and video professionals.

Adobe Sensei

According to Adobe, Sensei is an important new technology that drives many innovations in its products, now and in the future. But what exactly is Adobe Sensei?

Artificial intelligence is not exactly a new term; it has been around for decades. Recently, however, there seems to be a lot more talk about it. Industry pundits tell us that AI, and in particular its branch known as "machine learning," is poised to deliver amazing advances in all kinds of applications.

Products such as Amazon's Alexa and self-driving cars, along with fields such as robotics, games, social media, retail, finance and even health care, all use artificial intelligence and have benefited greatly from it. In case you're concerned, intelligent machines aren't going to take over the world and put you in an energy pod. At least not yet. What AI will do is allow computers to learn from and anticipate your actions so that, over time, they get "smarter" at the tasks you set them to do.

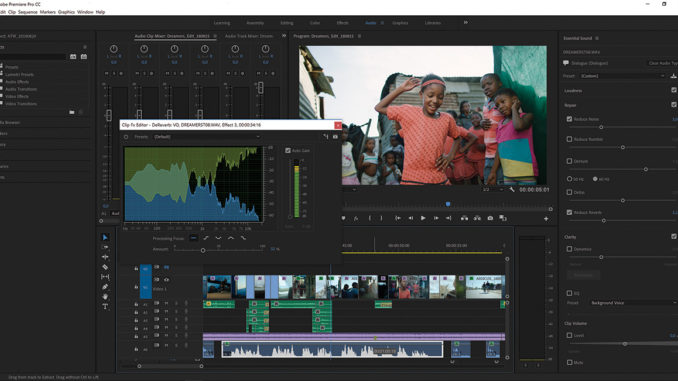

Adobe Sensei is Adobe's implementation of AI in the Creative Cloud. According to Adobe, it helps automate repetitive tasks while allowing you to maintain creative control. It already powers several features, such as Auto Ducking (automatically lowering music levels during spoken dialogue), matching color between shots and searching for specific images in Adobe Stock. The new release of Creative Cloud adds Sensei-enabled features such as intelligent audio cleanup tools and ways to transform art into animated characters. Keep an eye out for how Adobe Sensei continues to enhance Creative Cloud products in the future (see Figure 1).

New Features in Premiere

As I've written previously, the past few years have been good to Premiere Pro. Buoyed by its ubiquity (every Creative Cloud subscriber automatically has access to a copy) and by Adobe's diligent development of its professional features, it has risen in popularity. Today it is used by production companies, advertising agencies, television stations and independent editors around the world. Premiere Pro is well known for being format-friendly and for working well with its Creative Cloud brethren such as Photoshop, Illustrator and, most importantly, After Effects. All of these factors have contributed to its success.

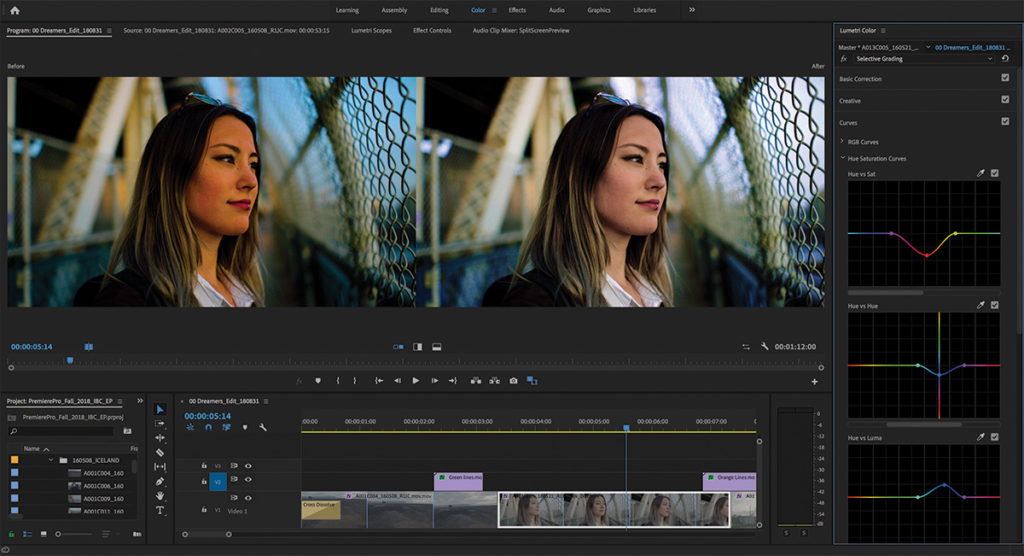

Let's start with color grading in Premiere Pro. Soon after Adobe acquired the SpeedGrade color-grading software, it went to work building a subset of SpeedGrade's sophisticated color tools directly into Premiere Pro. The result was the Lumetri Color panel, which gives users a remarkable number of color-correction tools inside Premiere Pro, without having to roundtrip to separate color-correction software. Along with controls such as color temperature, contrast and brightness, curves, and primary and secondary corrections, you can make selective adjustments to a specific range of colors, such as skin tones.

Previously, this was done with a single Hue/Saturation curve. In the new version of Premiere Pro, selective color grading has been expanded with new curves that offer greater precision and more control. Each one plots one value against another on its horizontal and vertical axes, pairing values such as Hue vs. Hue or Luma vs. Saturation (see Figure 2).

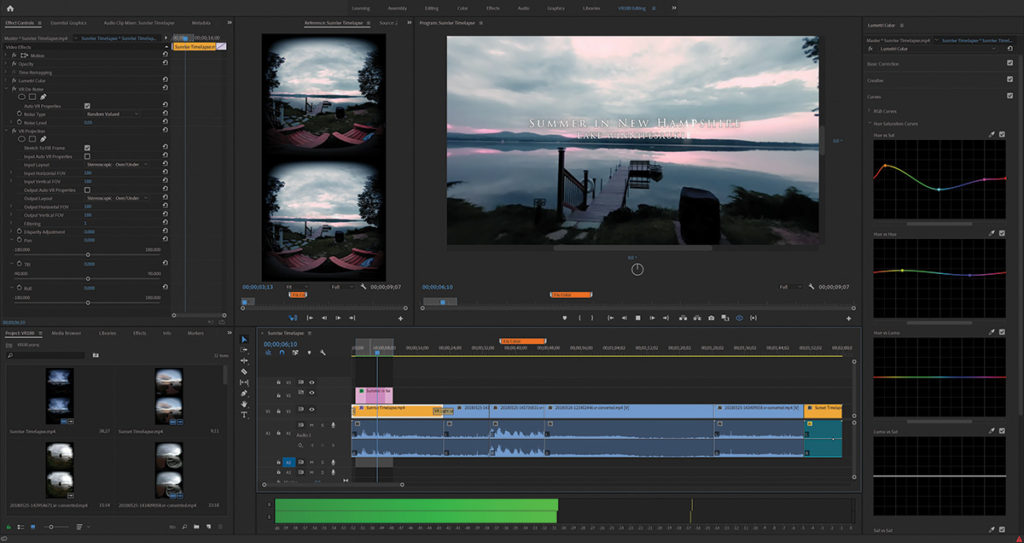

Premiere Pro now has tools for end-to-end VR180 video production. "Wait a second," I hear you say, "don't you mean VR360 video?" No. In case you haven't heard, Google has introduced a new VR format called VR180. As the name suggests, it is video that covers half the field of view of a 360-degree video.

Most of us have heard about or experienced 360 VR video (or immersive video). Whether you have donned a VR headset at a trade show or on a home entertainment system (or stuck your phone into a cardboard viewer), VR or 360 video allows you to turn your head in any direction to witness what is happening around you.

However, much of what you want to see usually isn't behind you at all, but in the 180-degree field in front of you. Consider a concert, for instance. You might want to watch the lead singer, then shift your gaze to the lead guitarist. After a while, you might want to check out the drummer. Only rarely, if ever, would you want to turn and face directly behind you. In fact, if you did that at a real concert, you would not only look weird, but you might also miss something important.

The VR180 format acknowledges this by doing away with the nonessential and restricting the viewing angle to the 180 degrees in front of you rather than offering full 360-degree immersion. VR180 is also commonly shot in stereoscopic 3D. With VR180, not only are you less distracted by things you probably wouldn't want to watch in the first place, but each eye's image covers only half of the sphere, so there is less data to store and process, relieving the burden on your system's resources (see Figure 3).

Premiere Pro now supports VR180, with optimized ingest, editing and effects for both monoscopic and stereoscopic VR180 content. When you've finished editing, you can output in Google's VR180 format so your creation can be viewed on YouTube or other VR180 platforms.

As with Premiere Pro's existing support for 360-degree VR content, you can view VR180 media on your screen (without a headset) as flat equirectangular images or in the rectilinear VR view. Or you can work while wearing a VR headset in the Adobe Immersive Environment.

Speaking of the Adobe Immersive Environment (180 or 360), the new version of Premiere Pro lets you place spatial markers in your media while working in a headset, so you can easily find those spots when you return to the desktop timeline. Also new is a feature called Theater Mode, which Adobe describes as a portable reference monitor within the Adobe Immersive Environment. According to the company, Theater Mode lets you watch content other than VR in the headset. This could be 2D content, hence the name "Theater Mode," since it is akin to watching something on a big screen. You can also combine this mode with VR, allowing you to walk around the screen or view it from different angles.

Other features in the new version of Premiere Pro include hardware-based encoding and decoding for H.264 and HEVC, which means less time waiting for your computer to render. Image processing has also been improved, allowing for faster and more responsive rendering and playback, including when using Lumetri Color. There's also new native support for the ARRI Alexa LF (Large Format), Sony Venice V2 and the HEIF (HEIC) format used by newer iPhones. Another nice touch is better visibility of legacy QuickTime files in your project.

Premiere and After Effects

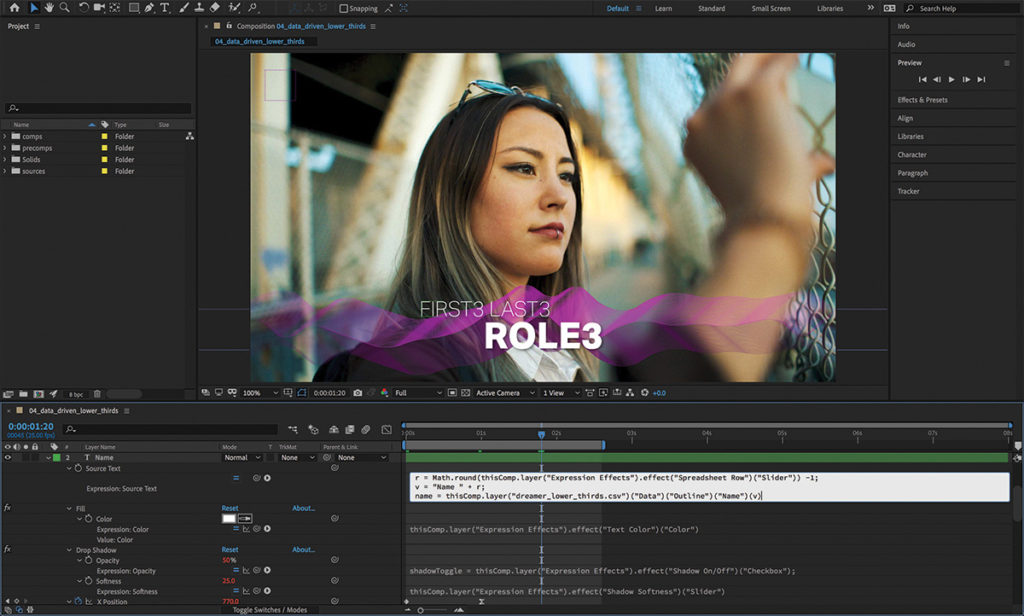

Also new in Premiere Pro are improved workflows for motion graphics created in After Effects. As we know, After Effects projects can be imported directly into Premiere — keeping things editable while eliminating the need for intermediate rendering between the two applications.

Beyond that, After Effects now allows designers to package complex After Effects projects into single files, known as motion graphics templates, with simplified controls for customization in Premiere Pro. When authoring a motion graphics template, After Effects artists can organize the editable parameters into groups with custom headings and twirl-downs (for showing or hiding sets of controls). These groups appear in Premiere Pro and give editors access to settings such as fonts and effect parameters (see Figure 4).

A feature called Responsive Design lets you preserve the timing of keyframes. For example, there may be portions of a motion graphics sequence where the timing of the animation must not change, so stretching or shrinking the overall duration of the sequence would throw things off. Responsive Design lets you protect designated regions, such as intros, reveals and outros.

Beyond customizable controls, motion graphics templates also support data-driven infographics. Designers can create slick-looking charts and graphs in After Effects without having the final data in hand. Later, editors can drop spreadsheet files onto the templates inside Premiere Pro, and the infographics will accurately reflect that data. In addition, changes to the spreadsheet itself automatically update the graphics.

Other After Effects Enhancements

The new version of After Effects includes advanced puppet pins for working with meshes. Advanced pins let you define position, scale and rotation, giving you significantly more control over how a mesh deforms around each pin. Bend pins let you create organic movement within a design, for effects such as a breathing chest or a wagging tail.

Depth passes are another new feature in After Effects. They let you position objects quickly and easily in 3D space for 3D compositing workflows. Use them to make 2D layers look like part of a 3D scene rendered in software such as Maxon's Cinema 4D.

The Mocha plug-in for After Effects, useful for tracking and rotoscoping, is now an integrated native, GPU-accelerated plug-in (Mocha AE) with a simplified interface.

After Effects also has a new, modern JavaScript engine for expressions, which makes them easier to create and runs them up to five times faster. Speaking of speed, After Effects gains across-the-board performance improvements and nine new GPU-optimized effects. Like Premiere Pro, After Effects now also supports VR180 content (see Figure 5).

Conclusion

There is a lot more to this upgrade of the video apps in Adobe Creative Cloud. We've focused mainly on Premiere Pro and After Effects, since they are arguably the most important applications in the suite. That's not to say Audition isn't important; it's a go-to tool for post-production audio and has its own intriguing updates. Adobe Character Animator has also received its share of love, and Adobe Stock has updated search filters and access to millions of curated cinematic 4K and HD videos. But, alas, we've run out of space for now.

One of the best things about the new features in Adobe Creative Cloud, however, is that they're all available for download as part of your subscription.